What is Text Mining?

Widely used in knowledge-driven organizations, text mining is the process of examining large collections of documents to discover new information or help answer specific research questions.

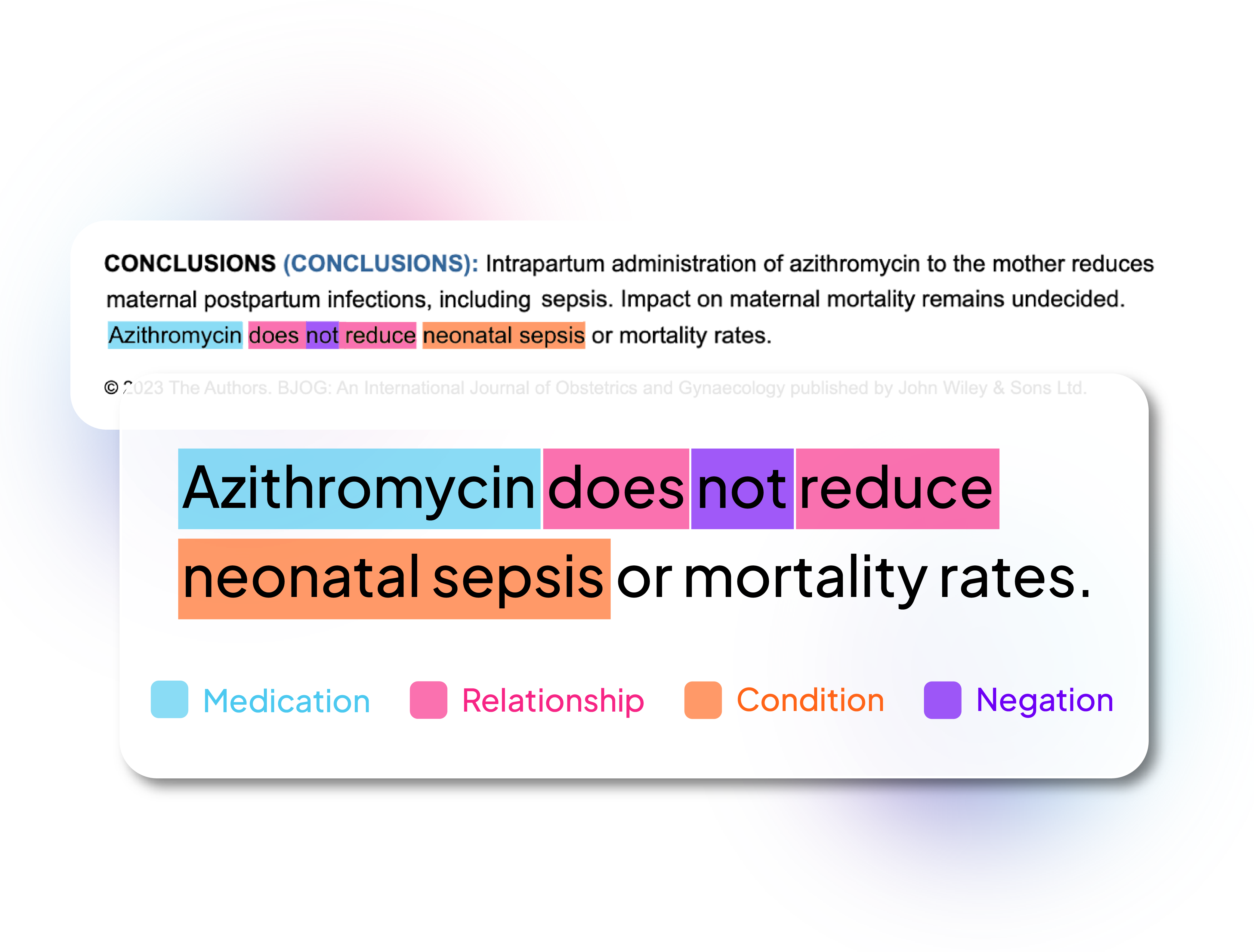

Text mining identifies facts, relationships and assertions that would otherwise remain buried in the mass of textual big data. Once extracted, this information is converted into a structured form that can be further analyzed, or presented directly using clustered HTML tables, mind maps, charts, etc. Text mining employs a variety of methodologies to process the text, one of the most important of these being Natural Language Processing (NLP).

The structured data created by text mining can be integrated into databases, data warehouses or business intelligence dashboards and used for descriptive, prescriptive or predictive analytics.

What is Natural Language Processing (NLP) for Healthcare?

Natural Language Processing (NLP) helps machines “read” textual information by simulating the human ability to understand, interpret, and generate language. It aims to seal the gap of communications between humans and computers by facilitating a natural language interface. The key aspect of NLP is natural language understanding, which describes the ability of a system to “read” or “listen” – recognize and generalize the contextual meanings embedded in various text expressions. Another key and popular aspect of NLP is natural language generation, aiming at generating meaningful language representations to “talk back” to human. Popular applications enabled by NLP include chatbots, question-answering systems, summarization tools, machine translation services, voice assistants etc. Clinical NLP or healthcare NLP is fine tuned to understand medical and scientific concepts and is particularly useful in extracting information from unstructured clinical notes.

Traditionally, linguistic rule-based methods and machine learning methods such as conditional random fields have been used for tasks associated with natural language understanding. Since 2017, there has been an explosion of transformer models ranging from BERT to large language models (LLMs) like ChatGPT. This provides a great opportunity for creating a powerful hybrid NLP system which ensembles various technologies to get the best results depending on the task required.

Today’s NLP systems can analyze unlimited amounts of text-based data without fatigue and in a consistent manner. They can understand concepts within complex contexts and decipher ambiguities of language to extract key facts and relationships or provide summaries. Given the huge quantity of unstructured data that is produced every day, from electronic health records (EHRs) to social media posts, this form of automation has become critical to analyzing text-based data efficiently.

For those working in healthcare and the more regulated parts of pharmaceuticals understanding the NLP outputs and methods are important. Auditable, explainable, and trusted AI technology is paramount.

Machine Learning, Large Language Models, and Natural Language Processing

Machine learning is an artificial intelligence (AI) technology which provides systems with the ability to automatically learn from patterns embedded in existing data and make predictions on new data.

Traditionally, machine learning methods require well-curated training data, however, this is typically not available from sources such as electronic health records (EHRs) or scientific literature where most of the data is unstructured text. The evolutionary transformer model, BERT, introduced the idea of fine-tuning by incorporating a model pre-trained in one domain in the training process, which made it possible to significantly reduce the amount of training data required for text mining tasks.

Recently, the impressive abilities of large language models (LLMs) in understanding human language and generate realistic text has attracted whole world’s attention to NLP. LLMs have shown great ability when used in general topics such as daily conversation and paraphrasing, which has prompted many pharmaceutical and healthcare organizations to evaluate LLMs in biomedical and healthcare domain applications.

When applied to EHRs, clinical trial records or full text literature, NLP can extract the clean, structured data needed to drive the advanced predictive models used in machine learning, thereby reducing the need for expensive, manual annotation of training data.

Ontologies, Vocabularies and Custom Dictionaries

Ontologies, vocabularies and custom dictionaries are powerful tools to assist with search, data extraction and data integration. They are a key component of many text mining tools, and provide lists of key concepts, with names and synonyms often arranged in a hierarchy.

Search engines, text analytics tools and natural language processing solutions become even more powerful when deployed with domain-specific ontologies. Ontologies enable the real meaning of the text to be understood, even when it is expressed in different ways (e.g. Tylenol vs. Acetaminophen). NLP techniques extend the power of ontologies, for example by allowing matching of terms with different spellings (Estrogen or Oestrogen), and by taking context into account (“SCT” can refer to the gene, “Secretin”, or to “Stair Climbing Test”).

The specification of an ontology includes a vocabulary of terms and formal constraints on its use. Enterprise-ready natural language processing requires range of vocabularies, ontologies and related strategies to identify concepts in their correct context:

- Thesauri, vocabularies, taxonomies and ontologies for concepts with known terms;

- Pattern-based approaches for categories such as measurements, mutations and chemical names that can include novel (unseen) terms;

- Domain-specific, rule-based concept identification, annotation and transformation;

- Integration of customer vocabularies to enable bespoke annotation;

- Advanced search to enable the identification of data ranges for dates, numerical values, area, concentration, percentage, duration, length and weight.

Linguamatics provides several standard terminologies, ontologies and vocabularies as part of its natural language processing platform.